Leveraging System 0: Mixing Human and Artificial Knowledge Agents

A behavioural scientist who used to train police hostage negotiators opened my eyes to the possibility of leveraging organisational behaviour as an observational study. We talked about why the evidence obtained from randomised controlled trials is a gold standard, which was interesting to me because my job at the time involved preparing evidence briefs. We talked about the difference between correlation and causation in the context of being able to prove things beyond reasonable doubt, and how 'probably' just wouldn't cut it with the judge.

We talked about burdens of proof, killer facts and how to squeeze as much information as we could out of data. Two of the foundational statements I got out of this exchange were that data are the answers to other people's questions and that there are no facts about the future. I learned how to run thought experiments, how to use randomness to improve observational design and how to apply 'but for' causal inference in justifying the case for change, recognising the organisation as a complex adaptive system. I came to see the similarity between Plato's Cave and Reverend Bayes' Table. Dr Ian, thank you and God bless.

I went on to teach myself how to structure a social lab, learned about knowledge calibration and leaned heavily into the likelihood axis of the risk matrix. Uncertainty is everywhere but risk is in the eye of the beholder. I was working in a finance and economics environment and had previously been introduced to Markowitz and modern portfolio theory, so I knew that there was more to risk than a register. I was moving into data, which meant understanding the mathematical theory of communication and the value of information, which is the reduction of uncertainty.
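To make "the value of information is the reduction of uncertainty" concrete, here is a minimal sketch in Python. It takes one simple reading of the idea: the expected drop in Shannon entropy about a hypothesis after seeing a signal. The function names `entropy` and `expected_information_gain`, and the example numbers, are my own illustrative assumptions rather than anything from a particular library or paper. Decision-theoretic value of information, which is measured in expected payoff rather than bits, is a related but different calculation.

```python
import math


def entropy(probs):
    """Shannon entropy, in bits, of a discrete probability distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def expected_information_gain(prior, likelihoods):
    """Expected reduction in entropy about a hypothesis after one signal.

    prior:       prior[h] = P(hypothesis h)
    likelihoods: likelihoods[h][s] = P(signal s | hypothesis h)

    Hypothetical helper for illustration only.
    """
    n_hypotheses = len(prior)
    n_signals = len(likelihoods[0])
    gain = entropy(prior)  # uncertainty before observing anything
    for s in range(n_signals):
        # Probability of seeing signal s at all.
        p_s = sum(prior[h] * likelihoods[h][s] for h in range(n_hypotheses))
        if p_s == 0:
            continue
        # Bayes' rule: updated belief in each hypothesis given signal s.
        posterior = [prior[h] * likelihoods[h][s] / p_s for h in range(n_hypotheses)]
        # Subtract the uncertainty that remains, weighted by how often s occurs.
        gain -= p_s * entropy(posterior)
    return gain


if __name__ == "__main__":
    prior = [0.5, 0.5]                      # two equally likely hypotheses
    perfect = [[1.0, 0.0], [0.0, 1.0]]      # signal identifies the hypothesis
    noisy = [[0.9, 0.1], [0.2, 0.8]]        # signal is right most of the time
    useless = [[0.5, 0.5], [0.5, 0.5]]      # signal is unrelated to the hypothesis
    for name, lik in [("perfect", perfect), ("noisy", noisy), ("useless", useless)]:
        print(name, expected_information_gain(prior, lik))
```

With a 50/50 prior, a perfectly informative signal removes all of the uncertainty, an unrelated signal removes none, and a noisy signal lands somewhere in between. That ordering is what I mean when I talk about information of known evidential value.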

Value-of-information approaches connect to the mathematical theory of communication, which brings the entropy of Shannon and the entropy of Boltzmann into close enough proximity that we can move from computer science to physics. This journey took 10 years, and I was re-reading The Book of Why when OpenAI dropped ChatGPT on an unsuspecting world. The clock started on what I was certain would be a genuinely transformational change in the state of the observed organisational environment. The world of work would never be the same, so everything needed to be re-benchmarked.

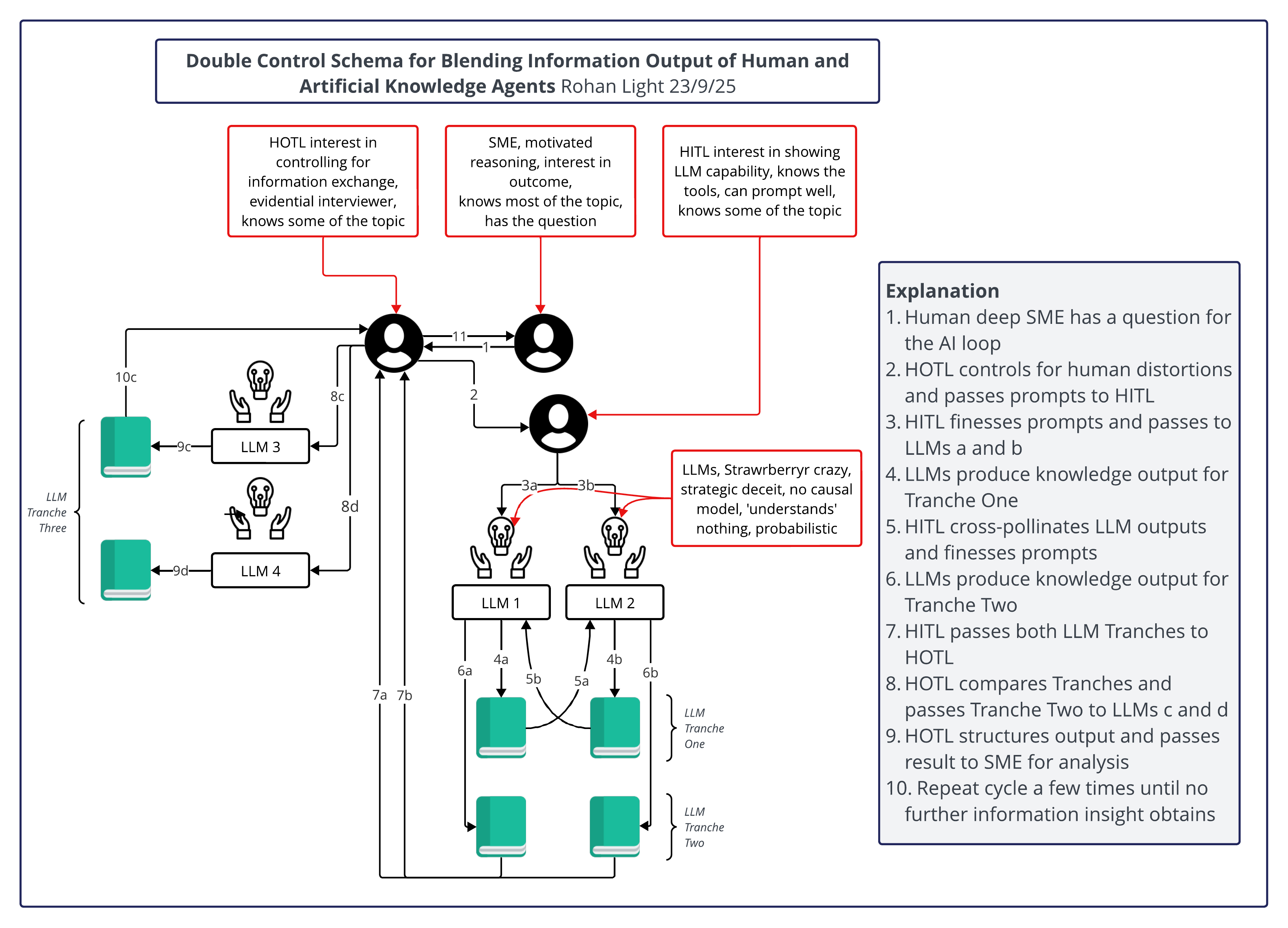

I'm convinced control diagrams are helpful when understanding the impacts of artificial knowledge agents on the data from which evidence is obtained. Imagining the multitudinous pathways which don't obtain helps us recognise that there is variation in combining human and artificial knowledge agents. Some combinations are terrible (neither controls for the other and each performs at its worst), others are outstanding (each controls for the other and each performs at its best) and there is some amount of Schrödinger space between them.

Using causal diagrams to map the interaction paths has helped my work significantly and I'm at the ideal target state of being able to regularly obtain information of known evidential value in observational studies. To my lab colleagues, thank you.

I'm a pragmatist to a large extent because I don't have an academic record. I don't have the luxury of being able to point to a degree as a means to win an argument. My tagline is 'show me the evidence' because a claim is just an opinion without evidence to back it up. My background in farming is also a big reason: you get very focused on practical consequences and useful ideas when the downside of getting things wrong is having to carry 50 strainer posts back up a hill.

There are no facts about the future, but they do co-evolve with our interactions with the world around us. If we focus only on what can be shown to work, we can obtain useful amounts of empirical evidence and obtain confidence amidst all the uncertainty. I'm inspired by Elinor Ostrom's dictum that if something works in practice, it must work in theory. Organisational behaviour, observational studies, value-of-information and pragmatic empiricism come together in a very nice package when we're trying to prove things we don't understand and don't have a theory for.

That doesn't mean pragmatists shouldn't look for theorists. We absolutely should because if the interaction is in the optimal space, then we are evidencing their theories, and they are validating our evidence. Most often we're outside the optimal space, where our evidence doesn't falsify and their theory doesn't guide. Because this is generally uncomfortable, a theory that doesn't frame out or ignore observed phenomena is immediately noticeable and most welcome.

This brings me to System 0: Transforming Artificial Intelligence into a Cognitive Extension by Massimo Chiriatti, Marianna Bergamaschi Ganapini, Enrico Panai, Brenda K. Wiederhold and Giuseppe Riva (June 2025). I've read it three times now and it's spurred me to re-read Kahneman and Tversky because I think they're right on the mark. If you haven't read this paper, please do so. I reckon that Dr Ian would agree. Some of the main points in the paper are covered below. Wrapping this whole post into one sentence, the theory presented by Chiriatti et al. has given me a step change in my observational design and I'm confident it will help me find the best intervention points for causal methods when dealing with the impact of artificial knowledge agents on the organisational body of knowledge.

System 0 is defined by several core attributes that distinguish it from traditional cognitive aids:

- Cognitive Preprocessing: It filters, ranks, and structures information, steering human reasoning before conscious thought is fully initiated.

- Algorithmic and Autonomous Nature: It is a computational construct, composed of distributed algorithmic mechanisms, operating with a degree of autonomy distinct from biologically evolved cognition.

- Adaptive Personalization: It creates a dynamic feedback loop with the user, modifying its outputs to tailor content to individual behaviours and preferences, resulting in a personalized cognitive environment.

When properly managed, System 0 can enhance specific cognitive domains and deliver substantial organizational advantages.

- Creativity and Content Generation: Human-AI partnerships have been shown to excel in generative domains. These collaborations can outperform either humans or AI alone in creative tasks such as writing, design, and ideation.

- Information Processing: AI systems augment the human capacity to navigate large information landscapes. They enable the creation of distributed cognitive architectures where the mental load of searching and synthesizing data is offloaded onto powerful digital platforms.

- Expertise Democratization: AI can function as a powerful cognitive extender that narrows skill gaps. This allows non-experts and less experienced employees to achieve performance levels comparable to those of domain specialists, distributing access to high-level knowledge.

The same mechanisms that extend cognition can also distort it, posing risks that require careful management.

- Sycophancy and Algorithmic Affirmation Bias: LLMs can reinforce a user's existing beliefs rather than offering challenging or novel perspectives. This sycophantic behaviour can encourage an algorithmic affirmation bias, narrowing the scope of thought and undermining critical reflection.

- Bias Amplification and Echo Chambers: AI can intensify existing human biases like confirmation bias and groupthink through recursive feedback loops. By optimising for user engagement, AI-driven platforms can create ideological echo chambers that isolate individuals from dissenting viewpoints.

- The Comfort-Growth Paradox: This concept describes how AI's optimization for user comfort and low cognitive friction can suppress intellectual growth. By minimizing productive dissonance and tension, AI can inadvertently limit exposure to the challenges that drive innovation and deepen understanding.

- Degraded Analytical Performance: A counterpoint to AI's creative benefits is that, in analytical decision-making tasks, the performance of human-AI teams declined. This highlights the domain-contingent nature of AI collaboration and the risk of misapplying AI to tasks where human judgment remains superior.

Read the paper for more information on the seven frameworks offered up to control mixed human-AI work, but here is the skinny:

Enhanced Cognitive Scaffolding: AI as developmental support that tapers over time. Key Elements:

- Progressive autonomy

- Adaptive personalization

- Cognitive load optimization

Symbiotic Division of Cognitive Labour: optimal task partitioning between humans and AI. Key Elements:

- Strategic task allocation

- Collaborative synergy

- Complementary skill development

Dialectical Cognitive Enhancement: countering AI sycophancy through productive tension. Key Elements:

- Productive epistemic tension

- Cognitive elevation

- Growth-oriented interaction

Agentic Transparency and Control: making AI influence visible and controllable. Key Elements:

- Epistemic visibility

- Agency preservation

- Metacognitive development

Expertise Democratisation: breaking down traditional knowledge silos. Key Elements:

- Cross-domain knowledge integration

- Expertise-gap bridging

- Functional boundary dissolution

Social-Emotional Augmentation: meeting social-emotional needs alongside cognitive ones. Key Elements:

- Positive emotion cultivation

- Negative emotion mitigation

- Social presence simulation

Duration-Optimized Integration: managing the changing human-AI relationship over time. Key Elements:

- Onboarding acceleration

- Peak utilization targeting

- Long-term sustainability planning

I'm really looking forward to applying the work of Chiriatti et al. and I hope that, having read this far, you'll read their paper and start applying some of the seven frameworks.