Explained but not understood

(An exploratory essay; last updated 4 December 2020)

When we lose track of whose version of a story to trust, paranoia ensues. It seems no coincidence that we find ourselves in an epistemological crisis several years into what is frequently described as a “crisis in the humanities”, the very subjects that devote themselves to epistemological systems: language, literature, history, philosophy. The destruction of epistemological foundations creates the crisis in knowledge

Sarah Churchwell, Can American democracy survive Donald Trump?, the Guardian, 21 November 2020

What does it mean to write effective data governance policy for artificial intelligence (AI) and automated decision systems (ADS) in the midst of a ‘crisis in knowledge’? If we do the best possible job with just parts of the system, doesn’t it just end up exposing the unfairness, powerlessness and unfreedom in the wider system? How much can we actually affect?

The body of work on explainable AI (XAI) is a promising pathway to helping restore a sense of democratic citizenship in our modern world. Yet explainability appears to be an asymptote: we keep approaching it but never seem to arrive. We know how to explain many elements of AI and ADS but we still struggle to be understood.

Epistemology is from Ionic Greek epistasthai "know how to do, understand," literally "overstand," from epi "over, near" + histasthai "to stand," from PIE root *sta- "to stand, make or be firm." The scientific (as opposed to philosophical) study of the roots and paths of knowledge is epistemics.

via Etymonline.

(Interesting how epistemology is about overstanding, the opposite of understanding. What does this mean for standover tactics, I wonder.)

Man stands face to face with the irrational. He feels within him his longing for happiness and for reason. The absurd is born of this confrontation between the human need and the unreasonable silence of the world.

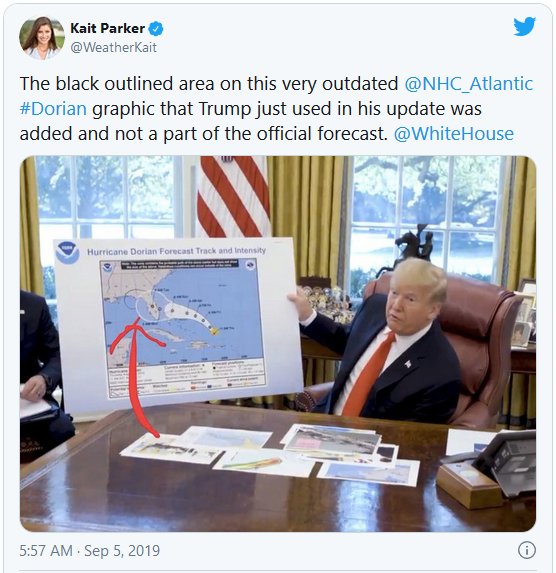

An epistemological crisis makes for a depressing world to live in, but it seems to be the one we’re in. For many data governance professionals it’s also an existential challenge: we feel compelled to keep going and refuse to give up whenever the magic Sharpie miraculously changes the path of a hurricane.

As our profession recovered from the shock of Cambridge Analytica, the ease with which elections were influenced and democratic norms ignored, we redoubled our efforts to do the best job we could to explain AI and ADS to our fellow citizens. We took strength from being blessed with scores of excellent colleagues identifying the things to do and not do.

And yet we still ended up having to explain the insanity of QAnon finding representation in the corridors of government and an outmatched executive who wondered aloud whether drinking bleach might help combat a respiratory epidemic. Part of the jarring dissonance is that we're surprised this doesn't seem weirder to more people, that we're having to choose whose story to trust.

There is art at play here, just not of the kind we might otherwise expect. There is art in words, as tens of thousands of best-selling authors can attest. We're dealing with something more slippery than logic. What's more, we might just have the initial frame completely wrong.

While the popular image of the conspiracy theorist is of a lone wolf piecing together puzzling connections with photographs and red string, that image no longer applies in the age of social media. Conspiracy theorizing has moved online and is now the end-product of a collective storytelling. The participants work out the parameters of a narrative framework: the people, places and things of a story and their relationships

(My emphasis in bold)

Conspiracy thinking uses protestations to the contrary as evidence of the conspiracy. Denouncing conspiracy thinking and its ilk aids its spread: an unanswered question about vaccination can put people on a soft recruitment path to extremism.

... most anti-vaxxers do not start out as outright science deniers. They become more polarized and fall into the trap of profiteers when they seek confirmation from echo chambers after their fears are dismissed and ridiculed

(Note the pre-covid coverage of dis/misinformation issues before we experienced the impact of scale)

So, what do we do now? Write more words? Craft more principles? Host more webinars? Shake/break more lib heads? All the above and less besides.

We’ve arrived at the point where seeking to explain AI and ADS to ourselves ends up trying to explain ourselves to ourselves... and discovering that a large proportion of our fellow selves d/won’t get it, d/won’t care or both. This is what an epistemic crisis looks like.

Doing more of what we are currently doing will give us more of what we already have. We cannot in good conscience do nothing. So where to start? A bigger helping of empathy goes a long way.

My collaborators and I suggest that three broad psychological needs underlie conspiracy beliefs: the need to understand the world; to feel safe; and to belong and feel good about oneself and one’s social group.

Aleksandra Cichocka, To counter conspiracy theories, boost well-being, Nature, 10 November 2020

That seems to be a very human assessment. And the word ‘feel’ appears to be more important than ‘understand’. Which, as we discovered earlier, is different from 'overstand' and thus implicated in our crisis of epistemology.

My sense is our task is to put less time into eloquent arguments to ourselves and focus instead on the majority of people who find themselves caught up in the midst of what now seems to them to be a truly vast web of AI and ADS. But how to do so when explainability is an asymptote?

Many of the people we’re talking to don’t need to be told that they lack a voice, have little power and are the ones who foot the bill. They know it already. They see it every day and know their children and children’s children are probably going to still be seeing it in the decades to come.

When we set to explaining AI and ADS, policy makers would do well to consider how to approach the dialogue. I mean dialogue instead of conversation, because a dialogue involves the exchange of question and answer until but one question remains. Then the parties answer the question, which here is about the boundary between explanation and understanding, in the midst of a crisis in overstanding.

Words only get us so far. But we know this already.

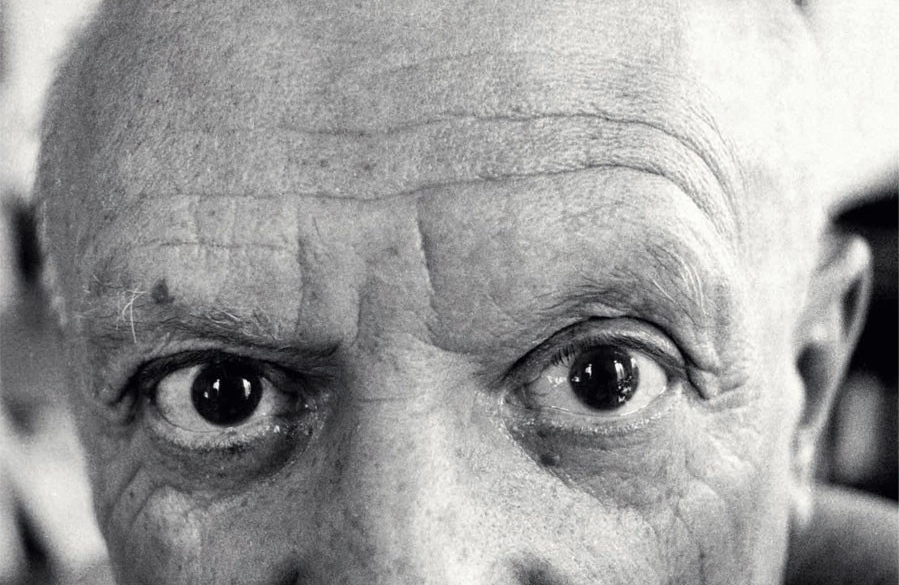

We have learned nothing in twelve thousand years

Pablo Picasso, after viewing the Lascaux cave art

Pictures are a different kind of story. They are worth a thousand words, as we know. And there is skill in using pictures to describe and explain. It’s called art.

While it might not form part of the conventional policy maker’s toolkit, it probably should. We know that the humanities are becoming increasingly necessary in good data science work. It’s not too much of a stretch to see history and criticism of art techniques making their way into explainability and other science communication work.

Consider for a moment the vexed issue of regulating, governing or forming policy for facial recognition technology. It’s a wicked problem to be sure because we know that it will be of great assistance in many areas, and also that its current applications are sufficiently flawed that responsible jurisdictions are calling a halt until the (many) bugs get ironed out.

Leaving aside for now the haruspex pseudo-science of affective computing (look into the screen and the computer will tell you how you're feeling), how should we approach this policy problem?

Who sees the human face correctly: the photographer, the mirror, or the painter?

Pablo Picasso

Recognising that data is socially constructed (it doesn’t exist separate from our desire to obtain it - we don’t go to the data tree and pick some data fruit), all data comprises a point of view, a perspective. That sounds familiar because it’s the same with art. Two people can look at the same piece and one sees the sublime where the other sees expensive rubbish.

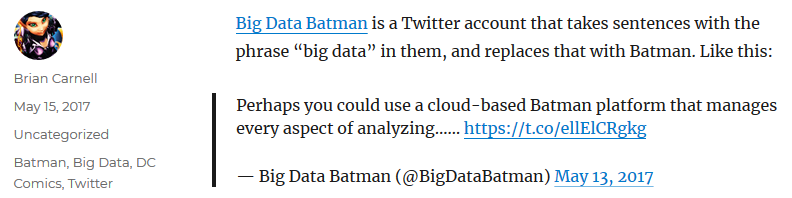

A great technique to help pop the mystique bubble that accompanies technology, data and AI is to follow the lead of the (now suspended) Twitter account Big Data Batman.

It’s a useful technique to highlight the buzzword bingo absurdism that underlies so much of tech-speak and presents a huge obstacle to resolving the explained-but-not-understood conundrum.

We can do it with AI and art. Reflect on this:

A picture is not thought out and settled beforehand. While it is being done it changes as one's thoughts change. And when it is finished, it still goes on changing, according to the state of mind of whoever is looking at it. A picture lives a life like a living creature, undergoing the changes imposed on us by our life from day to day. This is natural enough, as the picture lives only through the man who is looking at it

Pablo Picasso

An AI is not thought out and settled beforehand. While it is being done it changes as one's thoughts change. And when it is finished, it still goes on changing, according to the state of mind of whoever is looking at it. An AI lives a life like a living creature, undergoing the changes imposed on us by our life from day to day. This is natural enough, as the AI lives only through the [person] who is looking at it

Rohablo Picasso

Hmmm. The second paragraph opens unexpected doors for inquiry. We may discover something yet.

Do we want to create a society where the person lives only through the AI who is looking at them? Hopefully most readers will recoil at that, and rightly so. Yet it is the world we seem to be building one badly conceived facial recognition ADS at a time.

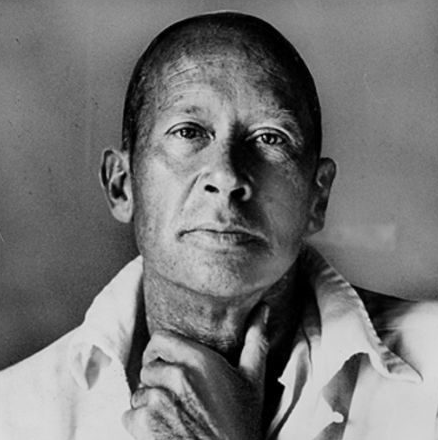

How might an artist show this?

This is how people felt about powerlessness as the 70s became the 80s, before our present algorithmic age. It might also be what we happen to look like through an imperfectly ground AI lens. And the AI view can't see that it's actually a sunny day and we're smiling in greeting.

Dehumanisation is one reason why AI and ADS policy writing is taking on such significant social and democratic implications and, in turn, asking questions of the cognitive and experiential diversity in our policy making.

People resist the commodification that the extension of data driven systems forces upon them because the alternative doesn’t bear thinking about.

To be nobody but yourself in a world which is doing its best, night and day, to make you everybody else means to fight the hardest battle which any human being can fight; and never stop fighting.

e.e. cummings, via Brain Pickings

There’s a lot of techno-rage in the world and much of it is being concentrated in protests against injustice, unfreedom and powerlessness. As I sense Picasso recognised upon exiting Lascaux, these feelings have for millennia been expressed by people in art, because it affords such a direct pathway to protest. And, crucially, it doesn’t need a thousand words.

In this sense, more words won’t help. Recall when you are scanning your media feeds deciding what to read and you see an article with an interesting headline but beneath it there is an italicized ‘12 minute read’. 'Can’t be bothered' is a common response and we jump over to Insta because it takes a lot less work.

When we’re writing policy for AI and ADS, ask how we might show rather than tell. If we want to explain something, we use science, but to understand something, we use art. We need both science and art in the room to help prepare for things like quantum computing.

We should count art history and critique as legitimate techniques to better understand how to approach thorny issues of AI and ADS strategy and policy. There's no data science without science. No science without a theory of change. No theory of change without a theory of power. And art is an effective means of critiquing power dynamics.

There is a role for formalism, but there is also a role for imagination, intuition and adventure. Maybe it’s not about how many qubits we have; maybe it’s about how many hackers we have.

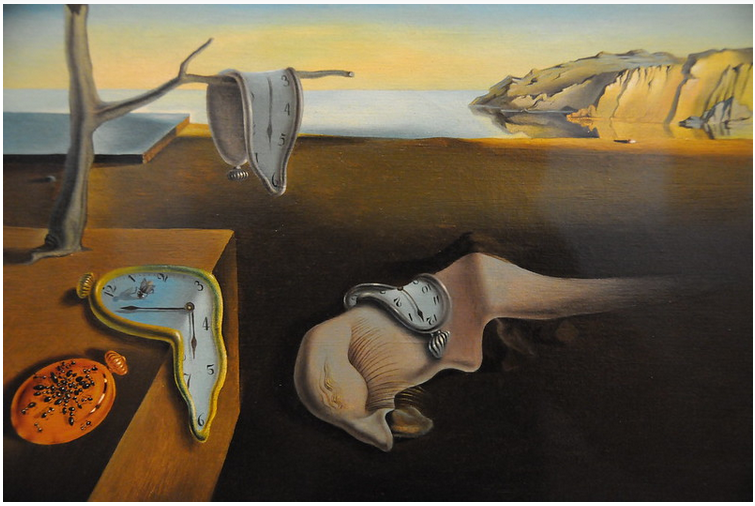

Sometimes there truly is no more rational way of putting something into words, where we reach the end of a medium's usefulness. Because of the probabilistic nature of data, we will find ourselves in the world of physics, exploring strange places and strange ideas, such as temporality.

Temporality (n.) late 14c., "temporal power," from Late Latin temporalitas, from temporalis "of a time, but for a time"

via Etymonline.

How might an artist show this?

Our lives are not driven by rational arguments. Reason helps us to clarify ideas, to discover errors. But that same reason also shows us that the motives by which we act are inscribed in our intimate structure as mammals, as humans, as social beings: reason illuminates these connections, it does not generate them. We are not, in the first place, reasoning beings. We may perhaps become so, more or less, in the second. In the first instance, we are driven by a thirst for life, by hunger, by the need to love, by the instinct to find our place in human society… The second instance does not even exist without the first. Reason arbitrates between instincts but uses the very same instincts as primary criteria in its arbitration

Carlo Rovelli, The Order of Time, Penguin Press, 16 April 2019

Sounds like art to me. And, as it turns out, we’re surrounded by art in our professional lives. Data viz specialists dedicate their careers to it. Good design, well conceived and executed, produces management art, and everyone who’s suffered through an all-day meeting can recall those moments when a great piece of design appears on the screen and we just ‘get’ it. That’s the power of art, managementally expressed.

The society for whom we’re writing AI and ADS policy is the same. Except they are less confined by dogma and doctrine. Furthermore, we see them communicating to the rest of us via the art of the street.

Millions of people get a thrill when the latest Banksy street art appears, challenging norms and speaking their truth to power.

And in a delicious twist, we now have GANsky:

... a twisted visual genius whose work reflects our unsettled times… [who] learns about the structures and textures in the works [of Banksy], looking for common patterns and themes and then tries to recreate that effect itself

Kabir Jhala, An AI bot has figured out how to draw like Banksy. And it's uncanny, the Art Newspaper, 23 October 2020

The relationship between Banksy and GANsky is emblematic of the deep-seated challenges that AI and ADS pose to society and policy makers alike. Art crashes logic and it’s in the tidying up where data governance professionals can make the leaps we need to make in order to deliver our mission.

We seek to explain AI and still it is not understood. And yet, as we look closer at the photograph, mirror or painting, we may see it is we, the policy makers, who do not understand.

Photo by Mark McCammon from Pexels