Claims that can't be tested are worthless

I have to remind myself what theory of change (ToC) is every time I go to use it. There's something vague about it that's unsettling. That sense of vagueness is uncertainty. We can reduce uncertainty through measurement, which has a close relationship with taking action.

We need to be intentional with the actions we take. They need to make sense in the context of our target future state, without which effective strategy can't be constructed. So, we use ToC to inform and improve our action taking, measurement and progress.

Our actions generate data, either actively or passively, and demonstrate that we have decided upon something. The difference between intention and decision is action. It is action that 'proves' the decision. If there is no action, then all we have is intention. The distinction is important and helps us differentiate facts from fiddle faddle.

Intention is necessary but not sufficient for decision making under uncertainty. Without intentionality we govern and manage by magic 8 ball. With intentionality we have a planet full of good ideas, but good ideas by themselves don't get us far. It is action that helps us separate the two. None of which is particularly earth shattering, novel or unique,

In art intentions are not sufficient and, as we say in Spanish: they must be proved by facts and not by reasons. What one does is what counts and not what one had the intention of doing

(Pablo Picasso in 1923, per Fifty Years of His Art by Alfred H. Barr Jr, 1980) https://assets.moma.org/documents/moma_catalogue_2843_300061942.pdf

Facts, not reasons, are what we're after. And we shouldn't be too bothered about taking data science advice from an artist. It helps pop our big data ego bubbles (which will re-form every time they're popped anyway). Proving things by reasons is a big reason we can't tell facts from fiddle faddle.

This is why I search for techniques that control for wishful thinking. Hence my current fascination with ToC approaches. We can use the ToC to inform and improve our action taking, measurement and progress. It helps us create effective feedback loops within teams, which improves our ability to learn our way forward, which helps us observe and understand our environment.

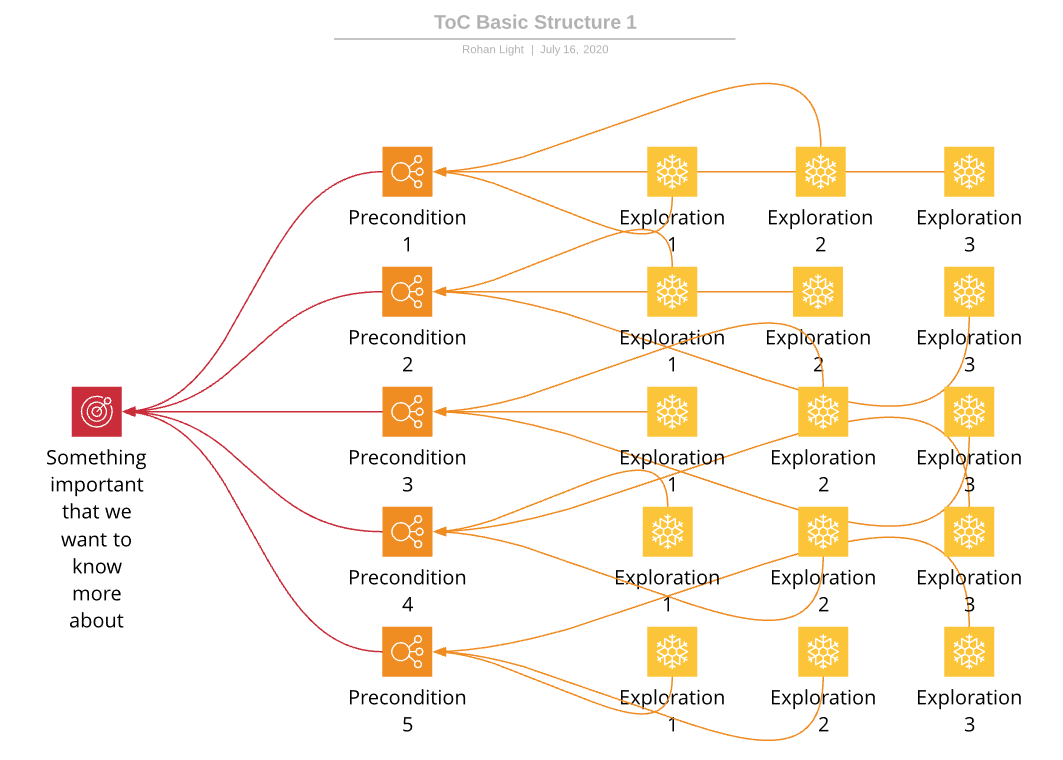

This is, as I wrote last post, where a well specified human centred design (hcd) + agile methodology comes in. Such a sense-making methodology will create data of known quality (as long as we don't muck about with the methodology, which is how we end up 'fauxgile' and 'fragile' rather than agile). But we are still left with the problem of where to point our little sense making instrument.

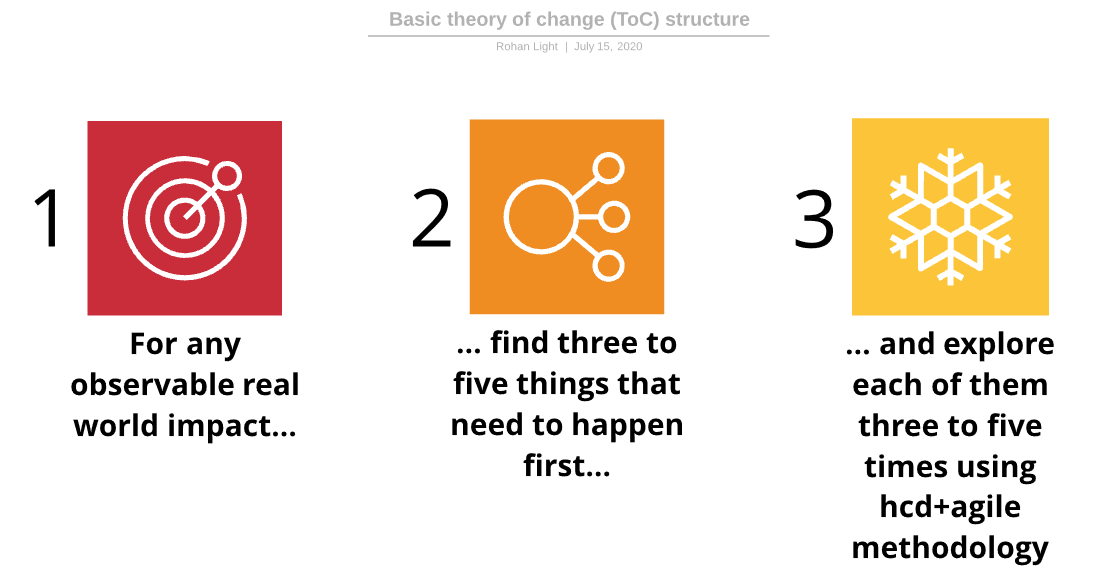

This is where the uncertainty approach comes in and I'm covering that over this series of posts. The ToC helps us get clearer with where we're going by reducing our uncertainty and giving us a breadcrumb trail towards meaning and understanding. Here's the ToC definition I'm using,

a theory of how and why an initiative works, which can be empirically tested by measuring indicators for every expected step on the hypothesized causal pathway to impact

(De Silva, M.J., Breuer, E., Lee, L. et al. Theory of Change: a theory-driven approach to enhance the Medical Research Council's framework for complex interventions. Trials 15, 267 (2014). https://doi.org/10.1186/1745-6215-15-267).
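To make "indicators for every expected step" concrete, here is a minimal sketch of how I might check a causal pathway for steps that can't be tested. Everything in it is an assumption for illustration only: the `Step` class, the `untestable_steps` helper and the example pathway are hypothetical, and none of it comes from De Silva et al.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Step:
    """One expected step on a hypothesised causal pathway (hypothetical structure)."""
    description: str
    indicators: List[str] = field(default_factory=list)  # what we would measure


def untestable_steps(pathway: List[Step]) -> List[str]:
    """Return the descriptions of steps that have no indicator attached."""
    return [step.description for step in pathway if not step.indicators]


# Illustrative pathway only; the steps and indicators are made up.
pathway = [
    Step("Run discovery interviews", ["interviews completed per sprint"]),
    Step("Team shares a clearer picture of user needs"),
    Step("Observable real world impact", ["change in service uptake"]),
]

gaps = untestable_steps(pathway)
print(gaps)
```

Any step that shows up in `gaps` is a claim we currently have no way of testing, which is exactly the part of the ToC that needs more thought before we act on it.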

This needs a bit of grounding,

The 'observable real world impact' piece is important down the track. At a deeper level it helps control for issues like Russell's Teapot and Sagan's Invisible Dragon,

"Now, what's the difference between an invisible, incorporeal, floating dragon who spits heatless fire and no dragon at all? If there's no way to disprove my contention, no conceivable experiment that would count against it, what does it mean to say that my dragon exists? Your inability to invalidate my hypothesis is not at all the same as proving it true. Claims that cannot be tested, assertions immune to disproof are veridically worthless, whatever value they may have in inspiring us or in exciting our sense of wonder. What I'm asking you to do comes to believing, in the absence of evidence, on my say-so"

(Carl Sagan, The Demon-Haunted World: Science as a Candle in the Dark, 1995)

At a more practical level, focusing on 'observable real world impact' makes sure we stick with empiricism and avoid straying into magical thinking. But an observable real world impact isn't enough. As Paul Smaldino wrote in Nature, better methods can't make up for mediocre theory,

Who cares if you can replicate an experiment that found that people think the room is hotter after reading a story about nice people? Will this help us to develop better theories? You can craft a fun story about that result, but can you devise the next great scientific question?

(Paul Smaldino, Better methods can't make up for mediocre theory, Nature, 2019)

One thing I've observed across all business units I've worked with over the last few years is a difficulty separating the trivial from the meaningful. Part of this is driven by measurement inversion (we measure what is close or easy, not what helps us understand the world around us).

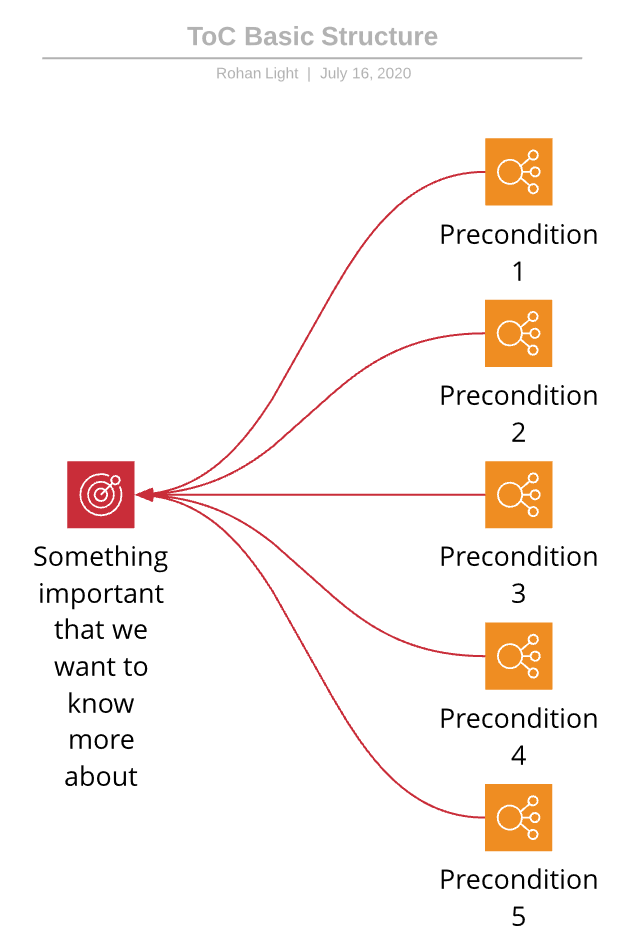

So we need to focus our ToC on the meaningful,

'Something important that we want to know more about' is good enough for now. It's a simplification of the full phrase I use: "a relationship we think is important but we don't know enough about". This is the door to the uncertainty approach to measurement, which we need to keep open for as long as possible. Why this is will become apparent later on.

Once we focus our attention on something important we want to know more about, we are able to control for one of the fundamental problems in data and analytics. Patrick Leach summed this up in one simple title in his book "Why Can't You Just Give Me the Number?". I see this all the time: the deep frustration people who don't speak data have with people who do.

They want the answer, without giving thought to the question or (more to the point) giving thought to the question-posing process. Picasso again identified the problem when he said "Computers are useless. They can only give you answers". Someone asked me once why there wasn't any data on a particular subject. I answered that it was because no one had bothered to ask the question and systematically explore possible answers. Once we start asking questions, we start gathering data.

Curiosity is the fundamental human quality here, not building prescriptive analytics capability, which emerges from technological solutionism (for a topical look at tech solutionism, check out Evgeny Morozov's article on the covid-19 app delirium). So let's just get better at asking questions (about something important we want to know more about).

I digress. If we want to know more about something, we must also be wanting to reduce our uncertainty about that something. And as long as we stay in this space, we are able to control for all sorts of decision error while keeping our attention locked onto the meaningful instead of the trivial.

But how are we going to reduce our uncertainty? By taking action through exploration and enquiry. Which takes us back to the hcd+agile methodology,

This post is too long already, so I'll stop here. But not before I foreshadow where this is heading: analytics leads to dialectics. The basic reason for this is that data is socially constructed and evidence is politically situated (or more simply, we make the data and there's nothing preventing others from completely ignoring it).